Accelerating into the cloud-native era: the value and role of Intel's hardware and software

In 2010, OpenStack, an open source platform jointly developed by NASA and Rackspace, was launched to help service providers and enterprises deliver cloud infrastructure services. It brought open source and open thinking to the cloud-native space and opened a new chapter in cloud-native development.

In 2020, the OpenStack Foundation changed its name to the Open Infrastructure Foundation (OIF), and OpenStack's scope expanded from "cloud" to "open infrastructure".

Over the years, OpenStack evolved from two projects, Nova for virtualization management and Swift for object storage, into a comprehensive collection of open source projects covering virtualization management, SDN, SDS, service orchestration, and container management. In November 2021, OIF announced LOKI, a new standard for open infrastructure built on open infrastructure management software such as Linux, OpenStack, and Kubernetes.

In April of this year, OIF released OpenStack Yoga and announced that with the next release, Zed (known as the Terminator), OpenStack will become a stable, production-grade tool for enterprise IT. This suggests that cloud native is gradually entering the post-OpenStack era. Since 2017, major cloud vendors have one after another packaged containers into commercial services and launched products based on Kubernetes. Container technology has matured and become standardized and commercialized, making containers the new representative of virtualization. Cloud native, which has developed around containers, is moving toward pervasive adoption, and enterprises that already use containers are undergoing a new round of cloud-native technical evolution.

Kubernetes in the post-OpenStack era: from "hard to use" to "good to use"

The acceleration of digital transformation has increased enterprise demand for cloud native, and container adoption has widened. According to IDC, the container software market has grown explosively in recent years and is predicted to keep growing at a compound rate of more than 40% over the next five years.

In turn, enterprise demand for container management will rise sharply, and container management has become a main battlefield of enterprise digital transformation. Gartner predicts that by 2025, 85% of large enterprises in mature economies will use container management.

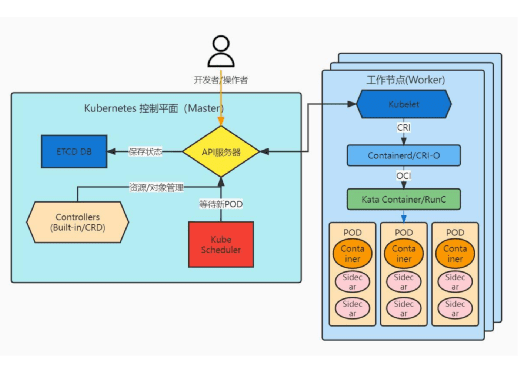

In most enterprise business scenarios today, organizations need to ensure that multiple containers can work together at the same time, and most of this work is done by orchestration engines. With the emergence and evolution of Kubernetes, many of the technical challenges of container orchestration have been overcome.

Perhaps because Kubernetes tries to solve so many problems and is complex as a result, many enterprises also use other container management solutions. However, market data shows that Kubernetes remains the choice of most enterprises: a recent CNCF report shows that Kubernetes has nearly 6 million enterprise users worldwide, making it the primary deployment model for applications in the cloud.

The wide adoption of Kubernetes does not mean that its users are satisfied; it is often described as "hard to use but still needed". In practice, users often run into "hard to use" problems such as long container creation times, low throughput/RPS/burst concurrency, slow container scaling, slow cluster scaling, Sidecar resource overhead, and low resource utilization. To address these problems, Intel has proposed a set of innovative technologies, with development work focused mainly on orchestration and observability:

- Creation of containers based on snapshots + hot code blocks.

- Sharded multi-scheduler.

- Automatic scaling of elastic Pods.

- Fast telemetry-based prediction for real-time scaling decisions.

- Dynamic insertion/deletion of Sidecar containers in Pods.

- Affinity scheduling/allocation of linked devices (NUMA, GPU+Smart NIC, etc.).

- Real-time "node resource changes" feedback to Kubernetes scheduler.

All of these technologies comply with the Kubernetes API specification and are compatible with existing APIs, so users can install and use them without modifying existing Kubernetes code. To make testing and evaluation easier, Intel also provides container images that users can install and deploy through standard Kubernetes application deployment methods such as Operators.
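To make the idea of telemetry-based prediction for scaling decisions more concrete, here is a minimal sketch in Python. It is not Intel's implementation, which the article does not describe. It assumes a hypothetical stream of requests-per-second (RPS) samples, fits a least-squares line to the recent samples, extrapolates one step ahead, and sizes the replica count to the predicted load. The function names, the `rps_per_pod` capacity figure, and the replica limits are all illustrative assumptions.

```python
import math
from typing import Sequence


def forecast_next(samples: Sequence[float]) -> float:
    """Extrapolate one step past the last sample with a least-squares line."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one telemetry sample")
    if n == 1:
        return float(samples[0])
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples))
    var = sum((i - mean_x) ** 2 for i in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_x
    # Load cannot be negative, so clamp the forecast at zero.
    return max(0.0, intercept + slope * n)


def desired_replicas(
    rps_samples: Sequence[float],
    rps_per_pod: float,          # hypothetical capacity of a single Pod
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Size the deployment for the predicted load instead of the current load."""
    if rps_per_pod <= 0:
        raise ValueError("rps_per_pod must be positive")
    predicted = forecast_next(rps_samples)
    wanted = math.ceil(predicted / rps_per_pod)
    return max(min_replicas, min(max_replicas, wanted))
```

With samples `[100, 200, 300, 400]` and an assumed capacity of 120 RPS per Pod, the forecast for the next interval is 500 RPS, so `desired_replicas` returns 5, one more than the 4 replicas the current load alone would call for. Scaling on the forecast rather than the latest reading is what lets the cluster start new Pods before the burst arrives.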

Once the "hard to use" problem is solved, the next question is how to make Kubernetes "good to use". The prerequisite is choosing the right architecture. In the post-OpenStack era, enterprises adopt cloud-native architecture in pursuit of agility, elasticity, high performance, and efficiency. Software-level optimization alone is not enough to achieve these goals; in the case of Serverless, for example, many deployment problems, such as function cold starts, need to be addressed through hardware-level optimization.

As data gradually spreads to edge scenarios, more and more enterprises expect cloud-native architecture to deliver an integrated, collaborative cloud-edge-end infrastructure. Intel has been working toward this, focusing on the different needs of enterprises at different stages of development and offering targeted architecture optimization solutions.

The widespread demand for AI in the enterprise poses a further challenge to using Kubernetes well. Nowadays, almost every application function is inseparable from AI, but AI models face multiple difficulties in moving from development to production deployment. Generally speaking, AI models go through extensive debugging and testing and usually take 2-3 days to deploy online. Moreover, the computing resources behind online AI services are usually fixed, so they respond slowly to sudden spikes in demand and make it hard to expand the business.

As a core cloud-native technology, Kubernetes can manage containerized applications across multiple hosts in a cloud platform and can handle the unified deployment, planning, updating, and maintenance of AI resources, effectively improving the efficiency of AI resource management. In addition, when building a Kubernetes-based AI development platform, CPU servers can make effective use of vacant resources and idle time by allocating them to other applications through Kubernetes' elastic resource scheduling. As a general-purpose computing power provider, the CPU has important advantages in procurement cost and ease of use, and it can serve not only AI workloads but also other application loads.

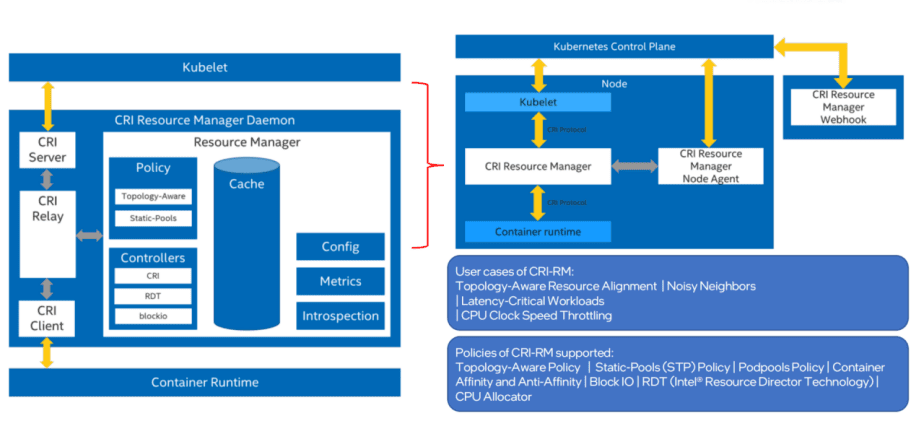

When Kubernetes was first released, its management and allocation of CPU and memory were relatively simple. Later versions gradually added new features such as CPU Manager and Topology Manager. However, the default CPU Manager and Topology Manager still cannot understand the complex internal architecture of server-class hardware or the capabilities of the CPU itself, which can lead to suboptimal CPU resource allocation decisions and computing performance. Intel® Xeon® Scalable processors have a complex and powerful architecture, so deploying Kubernetes clusters on them to support cloud business efficiently requires exposing their topology and CPU capabilities to the cluster. This is why CRI-RM (CRI Resource Manager) was created.
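To illustrate why topology awareness matters for CPU allocation, the following toy sketch in Python compares a naive allocator with a NUMA-aligned one. It is not the algorithm used by the Kubernetes CPU Manager, the Topology Manager, or CRI-RM; the topology dictionary and all function names are hypothetical and exist only for this illustration.

```python
from typing import Dict, List, Optional

# Hypothetical topology: NUMA node id -> ids of the free logical CPUs on it.
Topology = Dict[int, List[int]]


def allocate_naive(free: Topology, count: int) -> Optional[List[int]]:
    """Take the lowest-numbered free CPUs, ignoring NUMA boundaries."""
    pool = sorted(cpu for cpus in free.values() for cpu in cpus)
    return pool[:count] if len(pool) >= count else None


def allocate_numa_aligned(free: Topology, count: int) -> Optional[List[int]]:
    """Take every CPU from a single NUMA node, preferring the tightest fit."""
    candidates = [
        (len(cpus), node) for node, cpus in free.items() if len(cpus) >= count
    ]
    if not candidates:
        return None  # no single node can satisfy the request
    _, node = min(candidates)
    return sorted(free[node])[:count]


def nodes_spanned(free: Topology, cpus: List[int]) -> int:
    """Count how many NUMA nodes an allocation touches."""
    owner = {cpu: node for node, ids in free.items() for cpu in ids}
    return len({owner[cpu] for cpu in cpus})
```

Given the free set `{0: [2, 3], 1: [8, 9, 10, 11]}` and a request for four CPUs, `allocate_naive` returns `[2, 3, 8, 9]`, which spans two NUMA nodes, while `allocate_numa_aligned` returns `[8, 9, 10, 11]`, all on node 1. A workload placed by the naive allocator would pay for cross-node memory access, which is the kind of cost a topology-unaware resource manager cannot see.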

Thanks to the tireless efforts of Intel's R&D team, Intel® CRI-RM now enables CPUs to deliver more performance in AI scenarios. Its goal is to adapt the features of the Intel platform to the Kubernetes cluster environment.